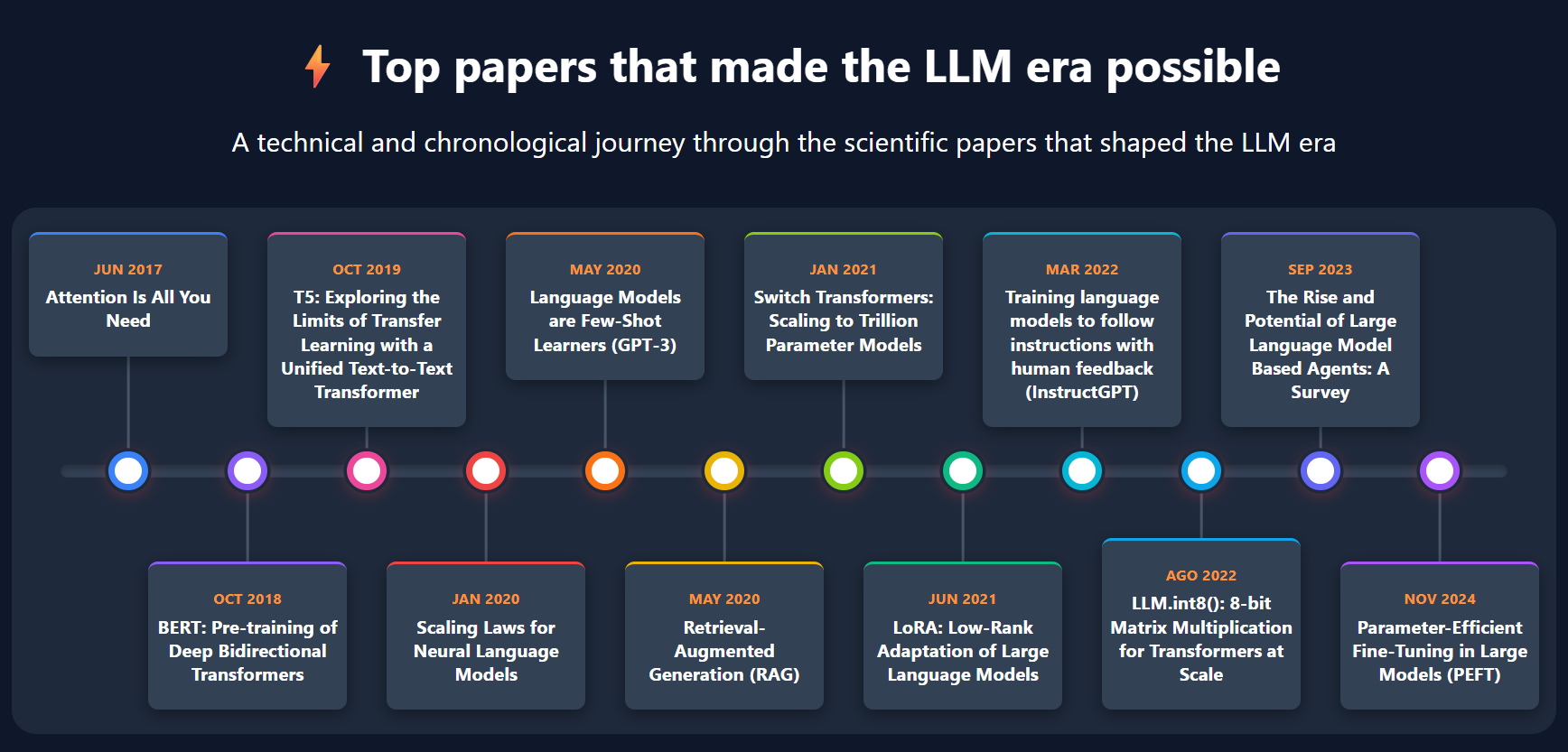

Top papers that made the LLM era possible

Throughout history, major technological breakthroughs tend to follow a clear pattern: first, invention, born from research; then industrialization, which makes the idea scalable and useful; then optimization, which perfects it and reduces costs. This cycle can be seen in different moments in history such as penicillin, the steam engine, or the microprocessor. Scientific advances that, after their appearance, evolved from a scientific discovery into widely used solutions.

The recent evolution of LLMs (Large Language Models) clearly fits this pattern. First came the invention, with research that laid the foundations — such as the Transformer architecture in 2017—. Then came the industrialization, with increasingly larger and more powerful models, and later, optimization, which reduced costs and expanded capabilities. We are now living a stage of democratization, in which these technologies are opening up to companies and users around the world (in the developed world).

In this article I will present (in my opinion and based on my knowledge) the key scientific papers that have driven each stage of development, showing how research has been the engine that has taken LLMs to their current expansion.

🧠 1. Invention (2017-2019) – Transformers, the foundation of the revolution

In 1928, when Alexander Fleming discovered penicillin, he did not single-handedly cure every disease, but he laid the scientific foundations of modern antibiotic medicine, which over time would save hundreds of millions of lives.

Something similar —although in a very different field— happened in 2017 with the publication of Attention Is All You Need, Google’s paper that introduced Transformers, a new neural network architecture that established the scientific base on which the entire LLM revolution has been built.

📄 Attention Is All You Need – June 2017

📎 https://arxiv.org/abs/1706.03762

This paper introduced the Transformer architecture, a much more computationally efficient and more accurate approach than the sequential models (RNN and LSTM) used up to that point. Its great innovation was the use of attention mechanisms, which make it possible to process text in parallel and capture more deeply the semantic relationships between words and phrases. This capability not only improved performance in language understanding and generation tasks, but also enabled scalability, allowing larger models to be trained more efficiently.

📄 BERT: Pre-training of Deep Bidirectional Transformers – October 2018

📎 https://arxiv.org/abs/1810.04805

BERT (Bidirectional Encoder Representations from Transformers) is a language model developed by Google. Before it appeared, most models read text in a single direction —left to right or right to left— which limited their ability to understand the full context of a sentence.

BERT changed this approach by introducing a bidirectional model, capable of “reading” simultaneously in both directions, thus better understanding the meaning of each word according to its context.

The paper also proposed a method to pre-train the model on large volumes of text (as if someone were being taught to read) and then easily fine-tune it for different tasks (as if someone who can read uses reading to learn how to solve “something”): text classification, sentiment analysis, or question answering. Thanks to this strategy, the same model could be reused across multiple applications.

📄 T5: Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer – October 2019

📎 https://arxiv.org/abs/1910.10683

T5 (Text-to-Text Transfer Transformer) is a model developed by Google that took the idea one step further. While many previous models required different architectures for each task —for example, one for classifying text, another for answering questions, another for translating— T5 proposed unifying everything into a single “text-to-text” framework.

The idea was simple: any language problem could be framed as generating text from other text. For example, if the task was translation, you would provide a text and ask for its translation; if it was question answering, you would provide the question and it would have to generate the answer, etc.

This approach greatly simplified the architecture and made it much easier to reuse a single model for multiple tasks, anticipating the logic of today’s LLMs: a single general-purpose model that can adapt to different uses with minimal adjustments.

🚀 2. Industrialization (2020-2021) – The great expansion of AI

When James Watt improved the steam engine in 1769, he did not invent thermal energy or steam, but he managed to scale that technology and turn it into the engine of an entire industrial revolution.

Something similar happened with LLMs starting in 2020. Transformers already existed, but it was in this stage that research focused on how to make them grow, training them with enormous volumes of data and large computational resources, showing that as their size increased, so did their capability. This is the stage in which LLMs stopped being experimental prototypes and became global technological infrastructure.

Below, we’ll look at some of the key papers that marked this leap and defined this new stage:

📄 Scaling Laws for Neural Language Models – January 2020

📎 https://arxiv.org/abs/2001.08361

This paper, developed by OpenAI, formulated the Scaling Laws, showing that the performance of language models improves as their size, the amount of training data, and compute increase. This finding provided a solid empirical foundation that not only justified the push toward ever-larger models, but also set a roadmap for the entire industry. For OpenAI, it represented a strategic inflection point, as it helped attract large-scale investment and laid the groundwork for the development of GPT-3 and, later, ChatGPT, driving a new phase in the history of generative AI.

📄 Language Models are Few-Shot Learners (GPT-3) – May 2020

📎 https://arxiv.org/abs/2005.14165

The GPT-3 paper, developed by OpenAI, showed for the first time that a language model —with 175 billion parameters— could perform multiple tasks without needing to be retrained, simply from a few examples included in the prompt. This phenomenon, known as “few-shot learning”, demonstrated that as model size increases, models also gain the ability to adapt to different tasks, showing skills for which they had not been trained directly.

This discovery was a turning point in the evolution of LLMs, laying the conceptual foundations of what would later become ChatGPT.

📄 Retrieval-Augmented Generation (RAG) – May 2020

📎 https://arxiv.org/abs/2005.11401

The RAG paper, developed by Meta AI, introduced a key idea: combining language models with external sources of information. Until that point, LLMs could only generate answers based on what they learned during training, effectively “freezing” their knowledge to the data seen in that stage.

RAG proposed a different approach: allowing the model to “consult” external knowledge bases in real time, retrieving relevant documents to use as input (prompting) and generating an informed answer.

Imagine an LLM was trained with documents produced up to January 1, 2025, including the official football rulebook. If a new version of the rules is published on January 15 with new or modified regulations, an RAG-based system could incorporate that new text into an external database and answer questions according to the updated rules, without having to retrain the model (which is extremely expensive).

In this way, the model not only “remembers,” but also “searches” (through the use of embeddings), improving accuracy and keeping information up to date. This approach marked a fundamental step toward modern systems that integrate LLMs with search engines, databases, or external APIs, paving the way for assistants that combine linguistic reasoning with dynamic access to information.

📄 Switch Transformers: Scaling to Trillion Parameter Models – January 2021

📎 https://arxiv.org/abs/2101.03961

The Switch Transformers paper, developed by Google, tackled one of the major challenges in LLMs: the enormous computational cost of training. As models grew in size, training them became increasingly expensive and slow. This work proposed an ingenious solution: activating only a specific part of the model instead of using it in full.

The idea is based on a mixture of experts architecture, where the model contains multiple specialized “blocks” or neural sub-networks, activating only the most relevant ones for each input. Thus, even if the full model has trillions of parameters, only a fraction is used per forward pass, drastically reducing compute consumption without sacrificing accuracy or quality.

Imagine a large company with thousands of employees: instead of gathering everyone for every decision, you consult only the people who know the topic best. The model works the same way, dynamically selecting the most suitable “experts” (neural sub-networks) based on the input text.

This approach made it possible to train and run models far more efficiently and sustainably, marking a before-and-after in LLM design. It also introduced a principle that remains key today: it’s not only about building bigger models, but also about making them smarter in how they use their resources.

📄 LoRA: Low-Rank Adaptation of Large Language Models – June 2021

📎 https://arxiv.org/abs/2106.09685

LoRA, developed by Microsoft, proposed a revolutionary way to adapt already-trained LLMs without having to retrain them end-to-end. Until then, retraining an LLM meant updating all of its parameters, which was extremely expensive.

LoRA offered an elegant solution: keep the original model frozen and add small trainable layers within its architecture. These layers learn from the new training data, and their outputs are combined with those of the original model during inference, allowing customization without altering the general knowledge.

In other words, LoRA showed that it wasn’t necessary to retrain the whole model to teach it something new; it was enough to add small, lightweight, and efficient adaptations. This approach dramatically reduced costs and simplified LLM adaptation, making it possible for smaller organizations to customize LLMs without incurring major expenses. In practice, LoRA democratized fine-tuning and opened the door to a new generation of more specialized and accessible models.

With advances such as Scaling Laws, GPT-3, RAG, Switch Transformer, and LoRA, LLMs reached the level of maturity needed to jump into real products. We see this in tools like ChatGPT, Claude, DeepSeek, GitHub Copilot, etc., which have already been integrated into our day-to-day lives.

🛠 3. Optimization (2022 – 2024) — Improved and made cheaper

In 1947, Bardeen, Brattain, and Shockley invented the transistor, forming the technological foundation on which modern microprocessors were built. That innovation laid the groundwork for the creation of the Intel 4004 in 1971, the first microprocessor with 2,300 integrated transistors. Since then, the history of computing has been a race to optimize and reduce costs, making chips increasingly powerful, compact, and accessible, leading to processors like the Intel Core i7 that integrate billions of transistors.

Something similar happened once tools based on LLMs had been built. The challenge became improving them and making them more efficient and useful for people. Between 2022 and 2024, research focused on three fronts: improving model efficiency through optimization techniques, giving them greater functionality with reasoning capabilities and the use of external tools, and achieving better interaction with people.

The result was a qualitative leap: LLMs stopped being limited to generating text and became useful, accurate, and collaborative systems. Let’s look at some of the key papers:

📄 LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale – August 2022

📎 https://arxiv.org/abs/2208.07339

This paper introduced a key technique in the area of quantization to reduce the size and computational cost of LLMs. Quantization consists of reducing the numerical precision with which models store and process their parameters —that is, using numbers with fewer decimal places—. This way, values take up less memory and operations run much faster. The challenge is that, as precision is reduced, models tend to lose accuracy and quality in their outputs.

LLM.int8() showed that it was possible to apply 8-bit quantization without significantly degrading performance, even in models with billions of parameters. Its innovation was a hybrid quantization strategy that used 8 bits for most computations and 16 bits only in cases with atypical activations (outliers), where numerical values are especially large.

This approach cut memory usage by roughly half and made it possible to run large models on a single GPU, enabling high-scale LLM work in a much more accessible and cost-effective way.

Overall, LLM.int8() became a benchmark in model optimization, showing that progress does not always depend on building bigger models, but on making them more efficient and smarter in their use of resources.

📄 Training language models to follow instructions with human feedback (InstructGPT) – March 2022

📎 https://arxiv.org/abs/2203.02155

In this paper, OpenAI transformed the way language models relate to people. The work focused on making LLMs provide answers more like those a human would give, introducing the method Reinforcement Learning from Human Feedback (RLHF): a process in which human evaluators rate and compare the model’s answers, providing examples of how it should behave under different instructions.

From that feedback, the model learns to prioritize clearer, safer, and more useful responses, adjusting its behavior toward what people actually expect. The result was a model that not only predicts words, but understands and follows instructions (Instruct) coherently.

InstructGPT showed that optimizing LLMs depends not only on technical efficiency, but also on their ability to communicate and interact more naturally with people, making AI more understandable, cooperative, and human-like.

📄 Parameter-Efficient Fine-Tuning in Large Models (PEFT) – November 2024

📎 https://arxiv.org/html/2410.19878v2

This survey (a review of different research papers) compiles and analyzes the main techniques for Parameter-Efficient Fine-Tuning (PEFT). These techniques emerged to address a key problem: how to adapt LLMs without retraining all of their parameters, which is extremely costly in time and resources.

The study brings together methods such as LoRA, Prompt Tuning, Prefix-Tuning, and P-Tuning, all with a common goal: customize a large model by modifying only a small fraction of its parameters. Thanks to these approaches, LLMs can solve new tasks —such as text classification, sentiment analysis, or code generation— quickly and efficiently, without losing the general knowledge learned previously.

Overall, this work consolidated an essential research area for the practical optimization of LLMs, making their adaptation flexible, modular, and affordable, enabling more organizations to leverage their potential without relying on large infrastructures.

📄 The Rise and Potential of Large Language Model Based Agents: A Survey – September 2023

📎 https://arxiv.org/abs/2309.07864

This survey analyzes the rise of language agents (LLM Agents), a new generation of LLM-based systems that not only generate text, but also reason, plan, and act autonomously. Agents are composed of several elements that allow them to interact more intelligently with their environment:

- Core model (LLM): the nucleus that interprets instructions and generates reasoning or plans.

- Memory: stores information from past interactions to maintain context and coherence.

- External tools: allow the agent to access search engines, databases, or APIs to obtain information or execute actions.

- Planner: breaks down a complex goal into logical steps and decides when and which actions to take.

Thanks to this modular structure, LLM agents can solve complex tasks, learn from context, and execute actions in real time. The study consolidated the concept of the “agent” within the LLM ecosystem and marked a key step toward more autonomous and practical systems.

Although today the term “intelligent agent” may sound modern, its origins go back to the 1980s within the field of artificial intelligence. As an anecdote from the author of these lines, I took a university course called Intelligent Agents in the 2009/2010 academic year. I remember perfectly that on January 27, 2010, the professor canceled the class for that course because it coincided with an event that would later prove “historic”: Steve Jobs’ presentation of the iPad.

With the advances shown, LLMs reached a new level of maturity. It was no longer only about making them bigger, but about making them more useful, faster, and more efficient. This phase consolidated the technical foundation on which today’s most advanced models are built, paving the way for their expansion and global adoption.

🤝 4. Democratization (2023 – 2025) — LLMs open up to the world

In February 2023, Meta AI released LLaMA, its LLM model. With this decision, language models stopped being a technology reserved for large companies and became an open and collaborative resource.

Other companies followed its example: Mistral AI with Mistral 7B (September 2023), Google with Gemma (February 2024), or DeepSeek AI with DeepSeek-V2 (May 2024), among others. Some released only the model, and others also released its source code, under more or less restrictive licenses, but all contributed to expanding access and experimentation.

The release of these models triggered a true revolution, enabling developers and organizations to adapt them to areas as diverse as education, medicine, or law. Platforms like Hugging Face also played a key role by facilitating the sharing of trained and specialized models, consolidating themselves as the major meeting point of the open-source ecosystem. In this way, an open and collaborative environment emerged, where innovation no longer depends only on large corporations, but also on a global community that experiments, improves, and shares knowledge.

Below, we show the “technical reports” and information for the open models from Meta, Mistral, and DeepSeek:

📄 LLaMA – Meta AI (2023-2025)

- LLaMA (February 2023) 📎 https://arxiv.org/abs/2302.13971: First LLaMA model, designed with public data and high efficiency. Its release allowed the scientific community to access, for the first time, a competitive model developed by a large company.

- LLaMA 2 (July 2023) 📎 https://arxiv.org/abs/2307.09288: Second generation of LLaMA, which included conversational versions (chat models) and licenses that even allowed commercial use, greatly expanding its adoption.

- LLaMA 3 (July 2024) 📎 https://arxiv.org/abs/2407.21783: Third generation with improvements in performance, alignment, and scalability. It consolidated LLaMA as one of the pillars of the open LLM ecosystem.

- LLaMA 4 (April 2025) 📎 https://ai.meta.com/blog/llama-4-multimodal-intelligence/: Fourth generation introducing a Mixture-of-Experts (MoE) architecture, multimodal capability (text, image, video), and ultra-long contexts.

📄 Mistral AI (2023–2025)

A European company that became one of the leading drivers of open models, betting on efficiency and accessibility.

- Mistral 7B (September 2023) 📎 https://arxiv.org/abs/2310.06825: A 7-billion-parameter model under the Apache 2.0 license. Its optimized architecture (grouped-query attention and sliding-window attention) made it stand out against larger models.

- Mixtral 8×7B (December 2023): A Mixture of Experts (MoE) model that combines eight 7B experts, activating only those needed for each task, improving efficiency without losing quality.

- Voxtral Technical Report (July 2025): Extends the focus toward speech understanding and multimodal models.

📄 DeepSeek AI – (2024-2025)

From China, DeepSeek AI emerged as one of the most solid and ambitious open-source projects, standing out for its efficiency and specialization.

- DeepSeek-Coder (January 2024) 📎 https://arxiv.org/abs/2401.14196: A model specialized in programming and code generation, trained on a massive dataset of public repositories.

- DeepSeek-V2 (May 2024) 📎 https://arxiv.org/abs/2405.04434: Introduces a Mixture of Experts architecture with “Multi-Head Latent Attention” and DeepSeekMoE, optimizing performance and computational cost.

- DeepSeek-R1 (January 2025) 📎 https://arxiv.org/abs/2501.12948: Explores logical reasoning with a technique called Reinforcement Learning with Reasoning Feedbacks (RLRF), which encourages more structured steps of thought.

In just a few years, LLMs have gone from being an academic experiment to becoming one of the biggest technological transformations of our time. From their invention with the Transformer to their opening to the public with projects like LLaMA, Mistral, or DeepSeek, AI has evolved from the lab to everyday use, changing the way we learn, work, and create.

Today, LLMs represent much more than a tool: they are a new language between humans and machines, an open space where innovation emerges from global collaboration.

List of the papers mentioned in the article in chronological order:

- ➡️ Attention Is All You Need – Jun 2017 📎 https://arxiv.org/abs/1706.03762

- ➡️ BERT: Pre-training of Deep Bidirectional Transformers – Oct 2018 📎 https://arxiv.org/abs/1810.04805

- ➡️ T5: Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer – Oct 2019 📎 https://arxiv.org/abs/1910.10683

- ➡️ Scaling Laws for Neural Language Models – Jan 2020 📎 https://arxiv.org/abs/2001.08361

- ➡️ Language Models are Few-Shot Learners (GPT-3) – May 2020 📎 https://arxiv.org/abs/2005.14165

- ➡️ Retrieval-Augmented Generation (RAG) – May 2020 📎 https://arxiv.org/abs/2005.11401

- ➡️ Switch Transformers: Scaling to Trillion Parameter Models – Jan 2021 📎 https://arxiv.org/abs/2101.03961

- ➡️ LoRA: Low-Rank Adaptation of Large Language Models – Jun 2021 📎 https://arxiv.org/abs/2106.09685

- ➡️ Training language models to follow instructions with human feedback (InstructGPT) – Mar 2022 📎 https://arxiv.org/abs/2203.02155

- ➡️ LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale – Aug 2022 📎 https://arxiv.org/abs/2208.07339

- ➡️ The Rise and Potential of Large Language Model Based Agents: A Survey – Sep 2023 📎 https://arxiv.org/abs/2309.07864

- ➡️ Parameter-Efficient Fine-Tuning in Large Models (PEFT) – Nov 2024 📎 https://arxiv.org/html/2410.19878v2